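Introduction

About a year and a half ago I moved from S3 to Wasabi (written up in "Migrating from S3 to Wasabi"). Wasabi no longer fits how I use it, and Cloudflare R2 looks better on cost, so I am migrating again.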

Environment

Windows 11 Professional

WSL2 Ubuntu 24.04 LTS

Why I migrated

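- I want to lower the bill, even slightly

- I want a service with zero egress fees

For these two reasons I decided to move to a different cloud storage service.

Incidentally, I first heard about Cloudflare R2 on X (formerly Twitter).

Note: I did not originally intend to migrate, but the more I read about Cloudflare R2, the better it matched my requirements, so I went ahead.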

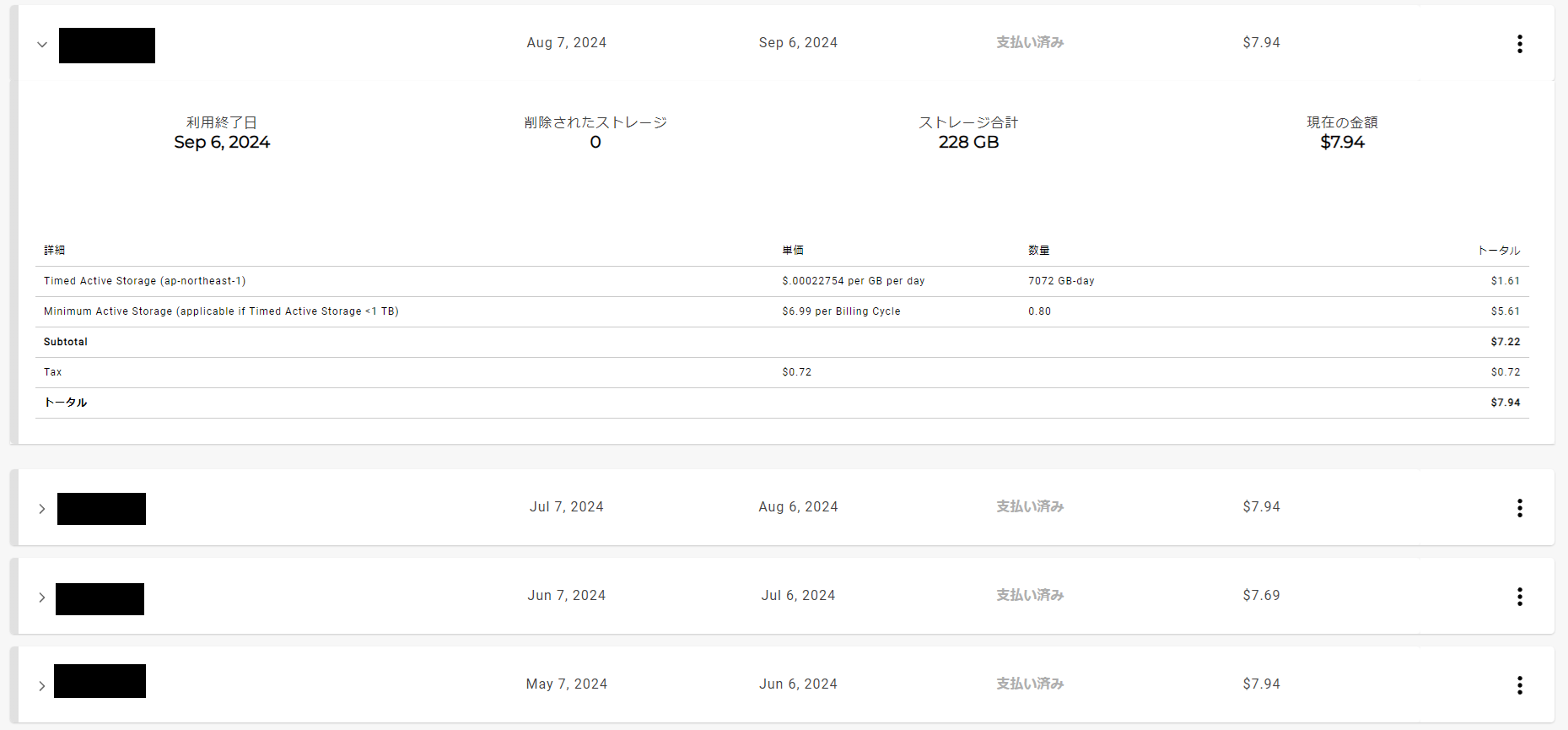

Wasabi usage

Wasabi charges

Pricing comparison

Cloudflare R2

https://www.cloudflare.com/ja-jp/developer-platform/r2/

Wasabi

https://wasabi.com/ja/pricing

https://wasabi.com/pricing/faq

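Wasabi's pricing is distinctive in that it bills for a minimum of 1 TB of active storage rather than for the capacity actually in use.

In other words, you pay for 1 TB even when you store less. I currently store well under 1 TB, so I am paying for capacity I do not use.

Below is a cost comparison based on my current usage.

Calculation for 228 GB of storage

1. Cloudflare R2

- Storage price: $0.015/GB per month

- Storage used: 228 GB

- Calculation: 228 GB × $0.015/GB = $3.42/month

2. Wasabi

- Minimum active storage: 1 TB (1,000 GB)

- Calculation: 1,000 GB × $0.0068/GB = $6.80/month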

Cost comparison at 228 GB

| Item | Cloudflare R2 | Wasabi |
|---|---|---|
| Storage | $3.42/month | $6.80/month |
| Data transfer (egress) | Free (no egress fees) | Free |
| Requests | $0.36 per million GET (Class B) requests | Free |
| Notes | Billed on actual usage | Billed on a 1 TB minimum |
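The storage arithmetic above can be sketched as a small script. This is only an illustration using the prices quoted in this post (not live pricing), and the function names `r2_cost` and `wasabi_cost` are made up for the example. Request charges are ignored.

```shell
#!/bin/sh
# Monthly storage cost = billed GB x price per GB-month.
# Prices are the figures quoted in this post, not live pricing.

# r2_cost USED_GB: R2 bills the capacity actually stored.
r2_cost() {
  awk -v gb="$1" 'BEGIN { printf "%.2f\n", gb * 0.015 }'
}

# wasabi_cost USED_GB: Wasabi bills at least 1 TB (1,000 GB).
wasabi_cost() {
  awk -v gb="$1" 'BEGIN { if (gb < 1000) gb = 1000; printf "%.2f\n", gb * 0.0068 }'
}

echo "R2:     \$$(r2_cost 228)/month"
echo "Wasabi: \$$(wasabi_cost 228)/month"
```

With these figures, R2 stays cheaper on storage alone up to roughly 453 GB ($6.80 ÷ $0.015/GB); beyond that the Wasabi minimum charge is no longer a disadvantage.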

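Method

- Use rclone.

- Copy the files from Wasabi to Cloudflare R2 by hand.

- Follow the method described in Cloudflare's documentation on migrating data to R2.

→ Wasabi does not appear among the supported source storage providers, so I dropped this option. (It might be possible, but rclone seemed good enough.)

As with the previous migration, I decided to use rclone. It supports both Cloudflare R2 and Wasabi, so I expected the migration to go smoothly.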

移行のための準備

Cloudflareアカウントの作成

※すでにアカウントは作成済みのためスキップ

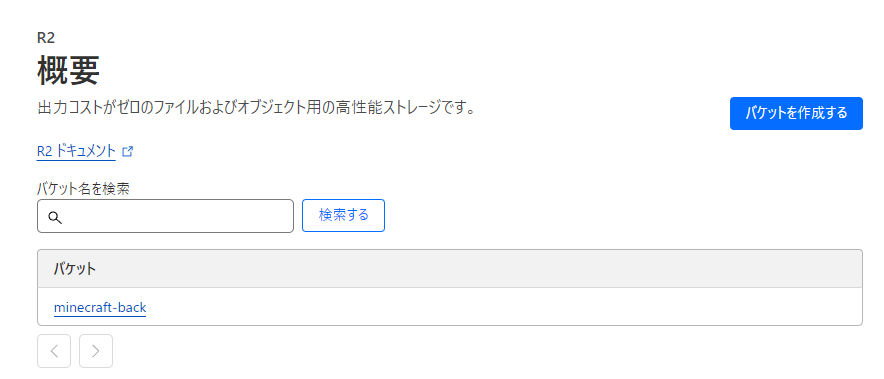

Cloudflare R2のサブスクリプションを追加する

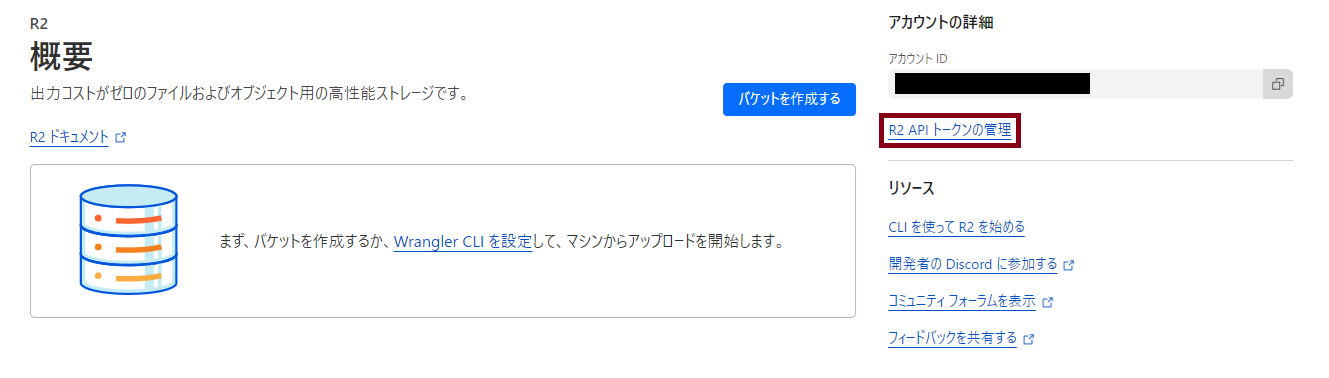

- サイドメニューより「R2」→「概要」を選択する。

- 表示ページの「R2サブスクリプションをアカウントに追加する」を選択する。

Cloudflare R2の概要の画面が表示されれば完了

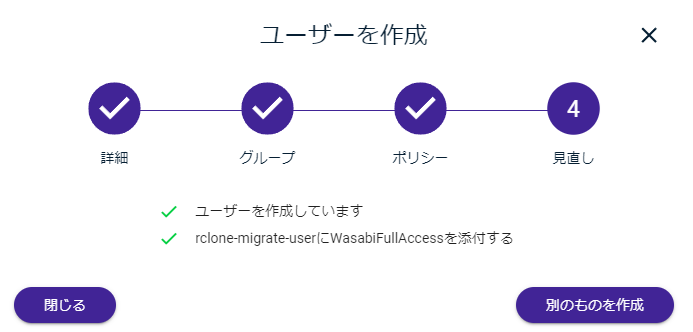

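Preparing Wasabi (create a user and get the access keys)

Create a user for rclone to use.

In the console, go to "Users" and select "Create User".

Enter a user name.

Here I used "rclone-migrate-user" and checked "Programmatic (create API key)" as the access type.

Skip the group assignment and select "Next".

For the policy, choose "WasabiFullAccess" and select "Next". Review the summary on the final screen, then select "Create User".

- The access key is displayed; make a note of it.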

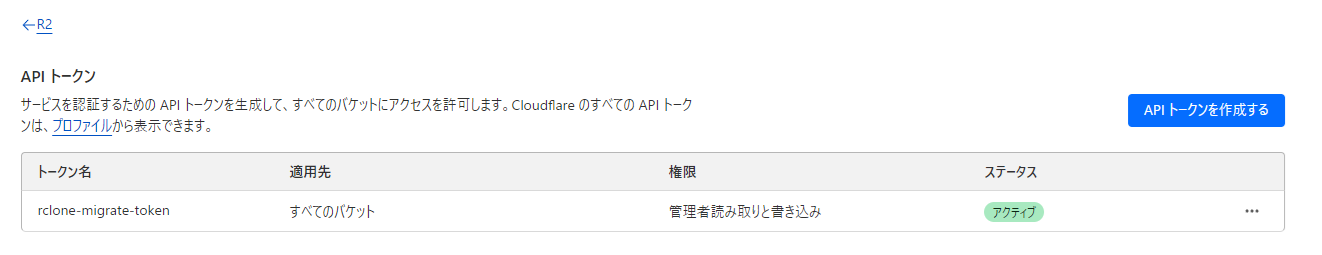

Cloudflare R2側の準備(APIトークンの作成+アクセスキーの取得)

- サイドメニューの「R2」→「概要」を選択し、「R2 APIトークンの管理」を選択する。

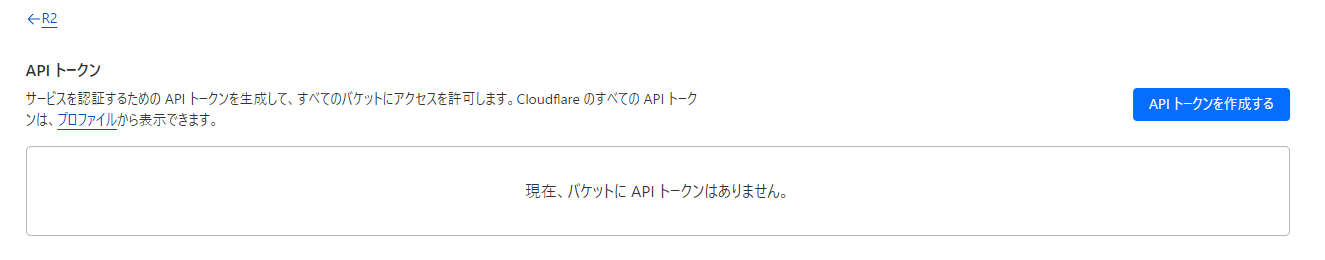

- 「APIトークンを作成する」を選択する。

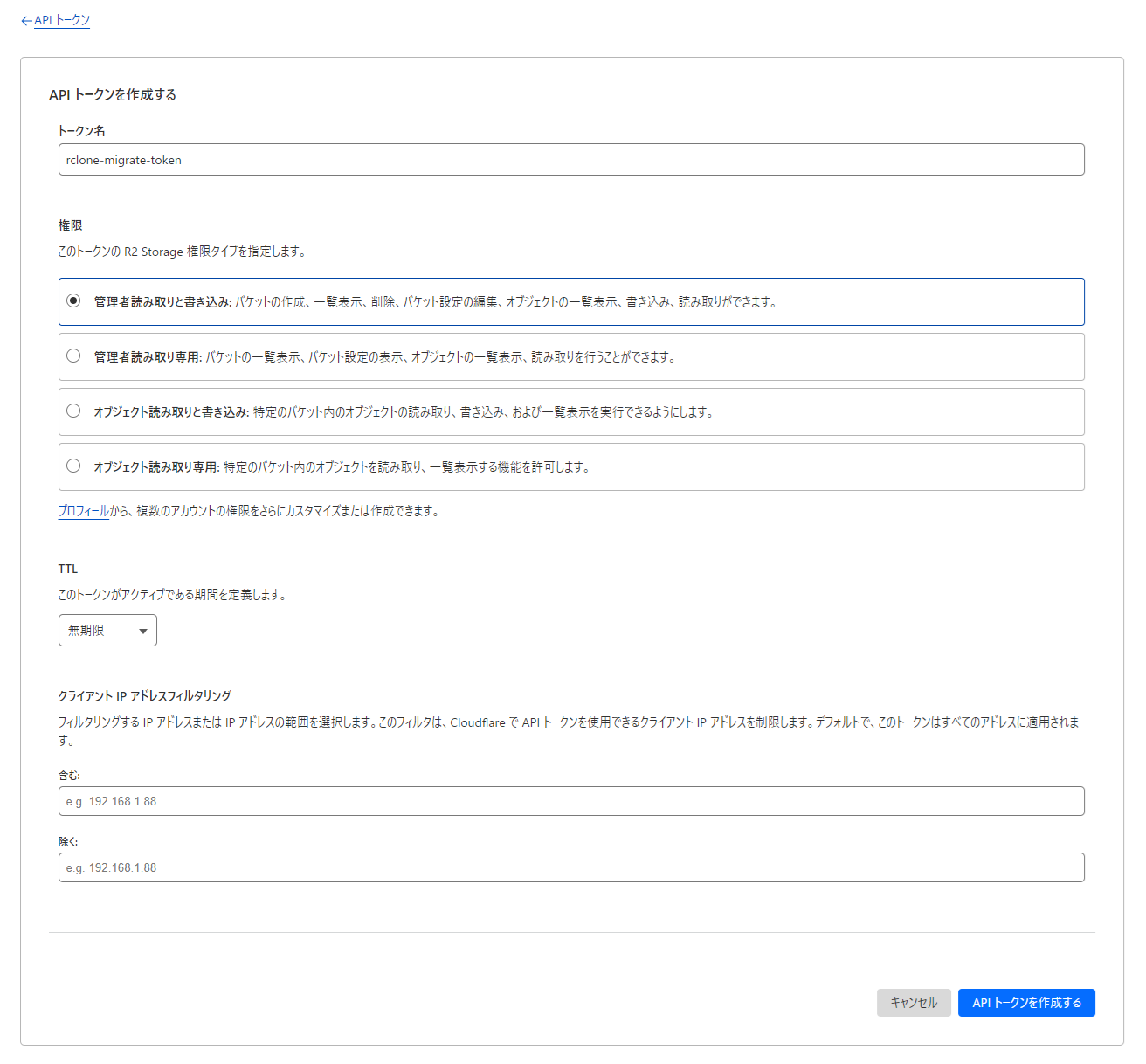

- トークン名と、権限を設定し、「APIトークンを作成する」を選択する。

トークン名: rclone-migrate-token

権限: 管理者読み取りと書き込み

とする。

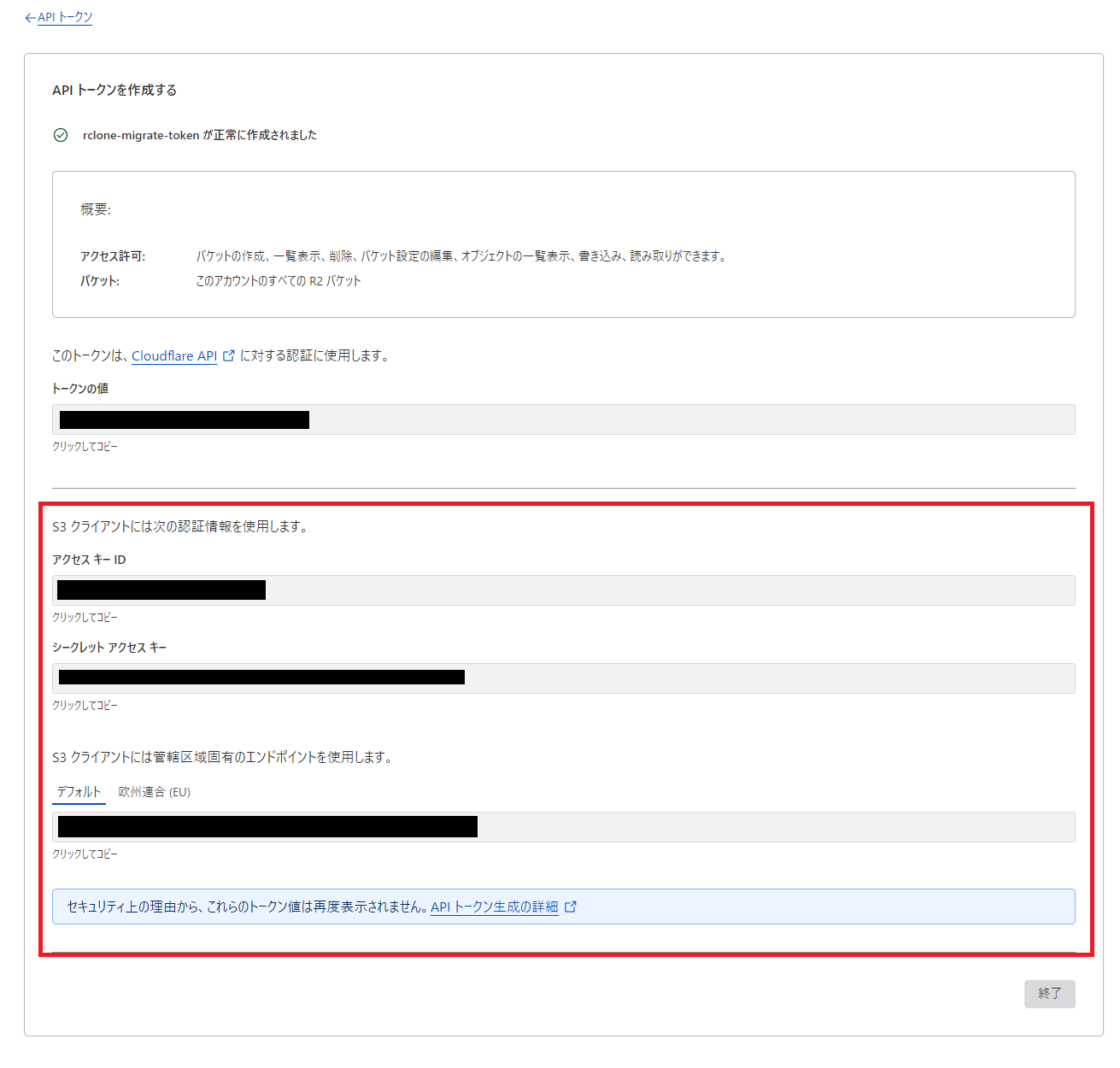

- トークン作成完了画面が表示されるので、S3の認証情報として、「アクセスキーID」「シークレットアクセスキー」「エンドポイント」を控えておく。

- APIトークン一覧に作成したトークンが表示されていることを確認する。

Migration

Installing rclone

Unlike last time, I installed rclone inside Ubuntu 24.04 LTS on WSL2.

Reference: https://rclone.org/install/

- Install with the script.

sudo -v ; curl https://rclone.org/install.sh | sudo bash

Log

~ sudo -v ; curl https://rclone.org/install.sh | sudo bash ✔ │ 06:59:17

[sudo] password for kbushi:

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 4734 100 4734 0 0 5826 0 --:--:-- --:--:-- --:--:-- 5822

None of the supported tools for extracting zip archives (unzip 7z busybox) were found. Please install one of them and try again.

No zip extraction tool such as unzip or 7z is installed, so install one.

sudo apt-get install unzip

Log

~ sudo apt-get install unzip 100 х │ 07:00:58

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

Suggested packages:

zip

The following NEW packages will be installed:

unzip

0 upgraded, 1 newly installed, 0 to remove and 9 not upgraded.

Need to get 175 kB of archives.

After this operation, 384 kB of additional disk space will be used.

Get:1 http://archive.ubuntu.com/ubuntu noble/main amd64 unzip amd64 6.0-28ubuntu4 [175 kB]

Fetched 175 kB in 1s (124 kB/s)

Selecting previously unselected package unzip.

(Reading database ... 48685 files and directories currently installed.)

Preparing to unpack .../unzip_6.0-28ubuntu4_amd64.deb ...

Unpacking unzip (6.0-28ubuntu4) ...

Setting up unzip (6.0-28ubuntu4) ...

Processing triggers for man-db (2.12.0-4build2) ...

Run the install script again.

Log

~ sudo -v ; curl https://rclone.org/install.sh | sudo bash ✔ │ 07:01:07

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 4734 100 4734 0 0 5575 0 --:--:-- --:--:-- --:--:-- 5569

Archive: rclone-current-linux-amd64.zip

creating: tmp_unzip_dir_for_rclone/rclone-v1.68.0-linux-amd64/

inflating: tmp_unzip_dir_for_rclone/rclone-v1.68.0-linux-amd64/README.txt [text]

inflating: tmp_unzip_dir_for_rclone/rclone-v1.68.0-linux-amd64/README.html [text]

inflating: tmp_unzip_dir_for_rclone/rclone-v1.68.0-linux-amd64/rclone.1 [text]

inflating: tmp_unzip_dir_for_rclone/rclone-v1.68.0-linux-amd64/rclone [binary]

inflating: tmp_unzip_dir_for_rclone/rclone-v1.68.0-linux-amd64/git-log.txt [text]

Purging old database entries in /usr/share/man...

Processing manual pages under /usr/share/man...

Checking for stray cats under /usr/share/man...

Checking for stray cats under /var/cache/man...

Purging old database entries in /usr/share/man/tr...

Processing manual pages under /usr/share/man/tr...

Checking for stray cats under /usr/share/man/tr...

Checking for stray cats under /var/cache/man/tr...

Purging old database entries in /usr/share/man/fi...

Processing manual pages under /usr/share/man/fi...

Checking for stray cats under /usr/share/man/fi...

Checking for stray cats under /var/cache/man/fi...

Purging old database entries in /usr/share/man/cs...

Processing manual pages under /usr/share/man/cs...

Checking for stray cats under /usr/share/man/cs...

Checking for stray cats under /var/cache/man/cs...

Purging old database entries in /usr/share/man/da...

Processing manual pages under /usr/share/man/da...

Checking for stray cats under /usr/share/man/da...

Checking for stray cats under /var/cache/man/da...

Purging old database entries in /usr/share/man/ko...

Processing manual pages under /usr/share/man/ko...

Checking for stray cats under /usr/share/man/ko...

Checking for stray cats under /var/cache/man/ko...

Purging old database entries in /usr/share/man/uk...

Processing manual pages under /usr/share/man/uk...

Checking for stray cats under /usr/share/man/uk...

Checking for stray cats under /var/cache/man/uk...

Purging old database entries in /usr/share/man/nl...

Processing manual pages under /usr/share/man/nl...

Checking for stray cats under /usr/share/man/nl...

Checking for stray cats under /var/cache/man/nl...

Purging old database entries in /usr/share/man/sr...

Processing manual pages under /usr/share/man/sr...

Checking for stray cats under /usr/share/man/sr...

Checking for stray cats under /var/cache/man/sr...

Purging old database entries in /usr/share/man/zh_CN...

Processing manual pages under /usr/share/man/zh_CN...

Checking for stray cats under /usr/share/man/zh_CN...

Checking for stray cats under /var/cache/man/zh_CN...

Purging old database entries in /usr/share/man/ro...

Processing manual pages under /usr/share/man/ro...

Checking for stray cats under /usr/share/man/ro...

Checking for stray cats under /var/cache/man/ro...

Purging old database entries in /usr/share/man/ja...

Processing manual pages under /usr/share/man/ja...

Checking for stray cats under /usr/share/man/ja...

Checking for stray cats under /var/cache/man/ja...

Purging old database entries in /usr/share/man/ru...

Processing manual pages under /usr/share/man/ru...

Checking for stray cats under /usr/share/man/ru...

Checking for stray cats under /var/cache/man/ru...

Purging old database entries in /usr/share/man/de...

Processing manual pages under /usr/share/man/de...

Checking for stray cats under /usr/share/man/de...

Checking for stray cats under /var/cache/man/de...

Purging old database entries in /usr/share/man/pl...

Processing manual pages under /usr/share/man/pl...

Checking for stray cats under /usr/share/man/pl...

Checking for stray cats under /var/cache/man/pl...

Purging old database entries in /usr/share/man/hu...

Processing manual pages under /usr/share/man/hu...

Checking for stray cats under /usr/share/man/hu...

Checking for stray cats under /var/cache/man/hu...

Purging old database entries in /usr/share/man/fr...

Processing manual pages under /usr/share/man/fr...

Checking for stray cats under /usr/share/man/fr...

Checking for stray cats under /var/cache/man/fr...

Purging old database entries in /usr/share/man/pt_BR...

Processing manual pages under /usr/share/man/pt_BR...

Checking for stray cats under /usr/share/man/pt_BR...

Checking for stray cats under /var/cache/man/pt_BR...

Purging old database entries in /usr/share/man/sl...

Processing manual pages under /usr/share/man/sl...

Checking for stray cats under /usr/share/man/sl...

Checking for stray cats under /var/cache/man/sl...

Purging old database entries in /usr/share/man/es...

Processing manual pages under /usr/share/man/es...

Checking for stray cats under /usr/share/man/es...

Checking for stray cats under /var/cache/man/es...

Purging old database entries in /usr/share/man/sv...

Processing manual pages under /usr/share/man/sv...

Checking for stray cats under /usr/share/man/sv...

Checking for stray cats under /var/cache/man/sv...

Purging old database entries in /usr/share/man/hr...

Processing manual pages under /usr/share/man/hr...

Checking for stray cats under /usr/share/man/hr...

Checking for stray cats under /var/cache/man/hr...

Purging old database entries in /usr/share/man/pt...

Processing manual pages under /usr/share/man/pt...

Checking for stray cats under /usr/share/man/pt...

Checking for stray cats under /var/cache/man/pt...

Purging old database entries in /usr/share/man/it...

Processing manual pages under /usr/share/man/it...

Checking for stray cats under /usr/share/man/it...

Checking for stray cats under /var/cache/man/it...

Purging old database entries in /usr/share/man/zh_TW...

Processing manual pages under /usr/share/man/zh_TW...

Checking for stray cats under /usr/share/man/zh_TW...

Checking for stray cats under /var/cache/man/zh_TW...

Purging old database entries in /usr/share/man/id...

Processing manual pages under /usr/share/man/id...

Checking for stray cats under /usr/share/man/id...

Checking for stray cats under /var/cache/man/id...

Processing manual pages under /usr/local/man...

Updating index cache for path `/usr/local/man/man1'. Wait...done.

Checking for stray cats under /usr/local/man...

Checking for stray cats under /var/cache/man/oldlocal...

1 man subdirectory contained newer manual pages.

1 manual page was added.

0 stray cats were added.

0 old database entries were purged.

rclone v1.68.0 has successfully installed.

Now run "rclone config" for setup. Check https://rclone.org/docs/ for more details.

Confirm that the rclone command runs.

rclone version

Log

rclone v1.68.0

- os/version: ubuntu 24.04 (64 bit)

- os/kernel: 5.15.153.1-microsoft-standard-WSL2 (x86_64)

- os/type: linux

- os/arch: amd64

- go/version: go1.23.1

- go/linking: static

- go/tags: none

Configuring rclone

- References

Wasabi settings: https://rclone.org/s3/#wasabi

Cloudflare R2 settings: https://rclone.org/s3/#cloudflare-r2

Wasabi configuration

Run the rclone config command.

rclone config

Settings entered

2024/09/23 07:10:42 NOTICE: Config file "/home/kbushi/.config/rclone/rclone.conf" not found - using defaults

No remotes found, make a new one?

# Enter "n"

n) New remote

s) Set configuration password

q) Quit config

n/s/q> n

# Enter "wasabi"

Enter name for new remote.

name> wasabi

# Enter "s3"

Option Storage.

Type of storage to configure.

Choose a number from below, or type in your own value.

1 / 1Fichier

\ (fichier)

2 / Akamai NetStorage

\ (netstorage)

3 / Alias for an existing remote

\ (alias)

4 / Amazon S3 Compliant Storage Providers including AWS, Alibaba, ArvanCloud, Ceph, ChinaMobile, Cloudflare, DigitalOcean, Dreamhost, GCS, HuaweiOBS, IBMCOS, IDrive, IONOS, LyveCloud, Leviia, Liara, Linode, Magalu, Minio, Netease, Petabox, RackCorp, Rclone, Scaleway, SeaweedFS, StackPath, Storj, Synology, TencentCOS, Wasabi, Qiniu and others

\ (s3)

5 / Backblaze B2

\ (b2)

6 / Better checksums for other remotes

\ (hasher)

7 / Box

\ (box)

8 / Cache a remote

\ (cache)

9 / Citrix Sharefile

\ (sharefile)

10 / Combine several remotes into one

\ (combine)

11 / Compress a remote

\ (compress)

12 / Dropbox

\ (dropbox)

13 / Encrypt/Decrypt a remote

\ (crypt)

14 / Enterprise File Fabric

\ (filefabric)

15 / FTP

\ (ftp)

16 / Files.com

\ (filescom)

17 / Gofile

\ (gofile)

18 / Google Cloud Storage (this is not Google Drive)

\ (google cloud storage)

19 / Google Drive

\ (drive)

20 / Google Photos

\ (google photos)

21 / HTTP

\ (http)

22 / Hadoop distributed file system

\ (hdfs)

23 / HiDrive

\ (hidrive)

24 / ImageKit.io

\ (imagekit)

25 / In memory object storage system.

\ (memory)

26 / Internet Archive

\ (internetarchive)

27 / Jottacloud

\ (jottacloud)

28 / Koofr, Digi Storage and other Koofr-compatible storage providers

\ (koofr)

29 / Linkbox

\ (linkbox)

30 / Local Disk

\ (local)

31 / Mail.ru Cloud

\ (mailru)

32 / Mega

\ (mega)

33 / Microsoft Azure Blob Storage

\ (azureblob)

34 / Microsoft Azure Files

\ (azurefiles)

35 / Microsoft OneDrive

\ (onedrive)

36 / OpenDrive

\ (opendrive)

37 / OpenStack Swift (Rackspace Cloud Files, Blomp Cloud Storage, Memset Memstore, OVH)

\ (swift)

38 / Oracle Cloud Infrastructure Object Storage

\ (oracleobjectstorage)

39 / Pcloud

\ (pcloud)

40 / PikPak

\ (pikpak)

41 / Pixeldrain Filesystem

\ (pixeldrain)

42 / Proton Drive

\ (protondrive)

43 / Put.io

\ (putio)

44 / QingCloud Object Storage

\ (qingstor)

45 / Quatrix by Maytech

\ (quatrix)

46 / SMB / CIFS

\ (smb)

47 / SSH/SFTP

\ (sftp)

48 / Sia Decentralized Cloud

\ (sia)

49 / Storj Decentralized Cloud Storage

\ (storj)

50 / Sugarsync

\ (sugarsync)

51 / Transparently chunk/split large files

\ (chunker)

52 / Uloz.to

\ (ulozto)

53 / Union merges the contents of several upstream fs

\ (union)

54 / Uptobox

\ (uptobox)

55 / WebDAV

\ (webdav)

56 / Yandex Disk

\ (yandex)

57 / Zoho

\ (zoho)

58 / premiumize.me

\ (premiumizeme)

59 / seafile

\ (seafile)

Storage> s3

# Enter "30"

Option provider.

Choose your S3 provider.

Choose a number from below, or type in your own value.

Press Enter to leave empty.

1 / Amazon Web Services (AWS) S3

\ (AWS)

2 / Alibaba Cloud Object Storage System (OSS) formerly Aliyun

\ (Alibaba)

3 / Arvan Cloud Object Storage (AOS)

\ (ArvanCloud)

4 / Ceph Object Storage

\ (Ceph)

5 / China Mobile Ecloud Elastic Object Storage (EOS)

\ (ChinaMobile)

6 / Cloudflare R2 Storage

\ (Cloudflare)

7 / DigitalOcean Spaces

\ (DigitalOcean)

8 / Dreamhost DreamObjects

\ (Dreamhost)

9 / Google Cloud Storage

\ (GCS)

10 / Huawei Object Storage Service

\ (HuaweiOBS)

11 / IBM COS S3

\ (IBMCOS)

12 / IDrive e2

\ (IDrive)

13 / IONOS Cloud

\ (IONOS)

14 / Seagate Lyve Cloud

\ (LyveCloud)

15 / Leviia Object Storage

\ (Leviia)

16 / Liara Object Storage

\ (Liara)

17 / Linode Object Storage

\ (Linode)

18 / Magalu Object Storage

\ (Magalu)

19 / Minio Object Storage

\ (Minio)

20 / Netease Object Storage (NOS)

\ (Netease)

21 / Petabox Object Storage

\ (Petabox)

22 / RackCorp Object Storage

\ (RackCorp)

23 / Rclone S3 Server

\ (Rclone)

24 / Scaleway Object Storage

\ (Scaleway)

25 / SeaweedFS S3

\ (SeaweedFS)

26 / StackPath Object Storage

\ (StackPath)

27 / Storj (S3 Compatible Gateway)

\ (Storj)

28 / Synology C2 Object Storage

\ (Synology)

29 / Tencent Cloud Object Storage (COS)

\ (TencentCOS)

30 / Wasabi Object Storage

\ (Wasabi)

31 / Qiniu Object Storage (Kodo)

\ (Qiniu)

32 / Any other S3 compatible provider

\ (Other)

provider> 30

# Enter "1"

Option env_auth.

Get AWS credentials from runtime (environment variables or EC2/ECS meta data if no env vars).

Only applies if access_key_id and secret_access_key is blank.

Choose a number from below, or type in your own boolean value (true or false).

Press Enter for the default (false).

1 / Enter AWS credentials in the next step.

\ (false)

2 / Get AWS credentials from the environment (env vars or IAM).

\ (true)

env_auth> 1

# Enter the Wasabi access key

Option access_key_id.

AWS Access Key ID.

Leave blank for anonymous access or runtime credentials.

Enter a value. Press Enter to leave empty.

access_key_id> [YOUR_ACCESS_KEY_ID]

# Enter the Wasabi secret access key

Option secret_access_key.

AWS Secret Access Key (password).

Leave blank for anonymous access or runtime credentials.

Enter a value. Press Enter to leave empty.

secret_access_key> [YOUR_SECRET_ACCESS_KEY]

# Enter "ap-northeast-1"

Option region.

Region to connect to.

Leave blank if you are using an S3 clone and you don't have a region.

Choose a number from below, or type in your own value.

Press Enter to leave empty.

/ Use this if unsure.

1 | Will use v4 signatures and an empty region.

\ ()

/ Use this only if v4 signatures don't work.

2 | E.g. pre Jewel/v10 CEPH.

\ (other-v2-signature)

region> ap-northeast-1

# Enter "10"

Option endpoint.

Endpoint for S3 API.

Required when using an S3 clone.

Choose a number from below, or type in your own value.

Press Enter to leave empty.

1 / Wasabi US East 1 (N. Virginia)

\ (s3.wasabisys.com)

2 / Wasabi US East 2 (N. Virginia)

\ (s3.us-east-2.wasabisys.com)

3 / Wasabi US Central 1 (Texas)

\ (s3.us-central-1.wasabisys.com)

4 / Wasabi US West 1 (Oregon)

\ (s3.us-west-1.wasabisys.com)

5 / Wasabi CA Central 1 (Toronto)

\ (s3.ca-central-1.wasabisys.com)

6 / Wasabi EU Central 1 (Amsterdam)

\ (s3.eu-central-1.wasabisys.com)

7 / Wasabi EU Central 2 (Frankfurt)

\ (s3.eu-central-2.wasabisys.com)

8 / Wasabi EU West 1 (London)

\ (s3.eu-west-1.wasabisys.com)

9 / Wasabi EU West 2 (Paris)

\ (s3.eu-west-2.wasabisys.com)

10 / Wasabi AP Northeast 1 (Tokyo) endpoint

\ (s3.ap-northeast-1.wasabisys.com)

11 / Wasabi AP Northeast 2 (Osaka) endpoint

\ (s3.ap-northeast-2.wasabisys.com)

12 / Wasabi AP Southeast 1 (Singapore)

\ (s3.ap-southeast-1.wasabisys.com)

13 / Wasabi AP Southeast 2 (Sydney)

\ (s3.ap-southeast-2.wasabisys.com)

endpoint> 10

# Enter "ap-northeast-1"

Option location_constraint.

Location constraint - must be set to match the Region.

Leave blank if not sure. Used when creating buckets only.

Enter a value. Press Enter to leave empty.

location_constraint> ap-northeast-1

# Enter "1"

Option acl.

Canned ACL used when creating buckets and storing or copying objects.

This ACL is used for creating objects and if bucket_acl isn't set, for creating buckets too.

For more info visit https://docs.aws.amazon.com/AmazonS3/latest/dev/acl-overview.html#canned-acl

Note that this ACL is applied when server-side copying objects as S3

doesn't copy the ACL from the source but rather writes a fresh one.

If the acl is an empty string then no X-Amz-Acl: header is added and

the default (private) will be used.

Choose a number from below, or type in your own value.

Press Enter to leave empty.

/ Owner gets FULL_CONTROL.

1 | No one else has access rights (default).

\ (private)

/ Owner gets FULL_CONTROL.

2 | The AllUsers group gets READ access.

\ (public-read)

/ Owner gets FULL_CONTROL.

3 | The AllUsers group gets READ and WRITE access.

| Granting this on a bucket is generally not recommended.

\ (public-read-write)

/ Owner gets FULL_CONTROL.

4 | The AuthenticatedUsers group gets READ access.

\ (authenticated-read)

/ Object owner gets FULL_CONTROL.

5 | Bucket owner gets READ access.

| If you specify this canned ACL when creating a bucket, Amazon S3 ignores it.

\ (bucket-owner-read)

/ Both the object owner and the bucket owner get FULL_CONTROL over the object.

6 | If you specify this canned ACL when creating a bucket, Amazon S3 ignores it.

\ (bucket-owner-full-control)

acl> 1

# Enter "n"

Edit advanced config?

y) Yes

n) No (default)

y/n> n

Configuration complete.

Options:

- type: s3

- provider: Wasabi

- access_key_id: [YOUR_ACCESS_KEY_ID]

- secret_access_key: [YOUR_SECRET_ACCESS_KEY]

- region: ap-northeast-1

- endpoint: s3.ap-northeast-1.wasabisys.com

- location_constraint: ap-northeast-1

- acl: private

Keep this "wasabi" remote?

y) Yes this is OK (default)

e) Edit this remote

d) Delete this remote

y/e/d> y

Current remotes:

Name Type

==== ====

wasabi s3

# Enter "q" to quit

e) Edit existing remote

n) New remote

d) Delete remote

r) Rename remote

c) Copy remote

s) Set configuration password

q) Quit config

e/n/d/r/c/s/q> q

Cloudflare R2 configuration

Run rclone config again and add the R2 remote.

rclone config

Settings entered

Current remotes:

Name Type

==== ====

wasabi s3

e) Edit existing remote

n) New remote

d) Delete remote

r) Rename remote

c) Copy remote

s) Set configuration password

q) Quit config

e/n/d/r/c/s/q> n

Enter name for new remote.

name> r2

Option Storage.

Type of storage to configure.

Choose a number from below, or type in your own value.

1 / 1Fichier

\ (fichier)

2 / Akamai NetStorage

\ (netstorage)

3 / Alias for an existing remote

\ (alias)

4 / Amazon S3 Compliant Storage Providers including AWS, Alibaba, ArvanCloud, Ceph, ChinaMobile, Cloudflare, DigitalOcean, Dreamhost, GCS, HuaweiOBS, IBMCOS, IDrive, IONOS, LyveCloud, Leviia, Liara, Linode, Magalu, Minio, Netease, Petabox, RackCorp, Rclone, Scaleway, SeaweedFS, StackPath, Storj, Synology, TencentCOS, Wasabi, Qiniu and others

\ (s3)

5 / Backblaze B2

\ (b2)

6 / Better checksums for other remotes

\ (hasher)

7 / Box

\ (box)

8 / Cache a remote

\ (cache)

9 / Citrix Sharefile

\ (sharefile)

10 / Combine several remotes into one

\ (combine)

11 / Compress a remote

\ (compress)

12 / Dropbox

\ (dropbox)

13 / Encrypt/Decrypt a remote

\ (crypt)

14 / Enterprise File Fabric

\ (filefabric)

15 / FTP

\ (ftp)

16 / Files.com

\ (filescom)

17 / Gofile

\ (gofile)

18 / Google Cloud Storage (this is not Google Drive)

\ (google cloud storage)

19 / Google Drive

\ (drive)

20 / Google Photos

\ (google photos)

21 / HTTP

\ (http)

22 / Hadoop distributed file system

\ (hdfs)

23 / HiDrive

\ (hidrive)

24 / ImageKit.io

\ (imagekit)

25 / In memory object storage system.

\ (memory)

26 / Internet Archive

\ (internetarchive)

27 / Jottacloud

\ (jottacloud)

28 / Koofr, Digi Storage and other Koofr-compatible storage providers

\ (koofr)

29 / Linkbox

\ (linkbox)

30 / Local Disk

\ (local)

31 / Mail.ru Cloud

\ (mailru)

32 / Mega

\ (mega)

33 / Microsoft Azure Blob Storage

\ (azureblob)

34 / Microsoft Azure Files

\ (azurefiles)

35 / Microsoft OneDrive

\ (onedrive)

36 / OpenDrive

\ (opendrive)

37 / OpenStack Swift (Rackspace Cloud Files, Blomp Cloud Storage, Memset Memstore, OVH)

\ (swift)

38 / Oracle Cloud Infrastructure Object Storage

\ (oracleobjectstorage)

39 / Pcloud

\ (pcloud)

40 / PikPak

\ (pikpak)

41 / Pixeldrain Filesystem

\ (pixeldrain)

42 / Proton Drive

\ (protondrive)

43 / Put.io

\ (putio)

44 / QingCloud Object Storage

\ (qingstor)

45 / Quatrix by Maytech

\ (quatrix)

46 / SMB / CIFS

\ (smb)

47 / SSH/SFTP

\ (sftp)

48 / Sia Decentralized Cloud

\ (sia)

49 / Storj Decentralized Cloud Storage

\ (storj)

50 / Sugarsync

\ (sugarsync)

51 / Transparently chunk/split large files

\ (chunker)

52 / Uloz.to

\ (ulozto)

53 / Union merges the contents of several upstream fs

\ (union)

54 / Uptobox

\ (uptobox)

55 / WebDAV

\ (webdav)

56 / Yandex Disk

\ (yandex)

57 / Zoho

\ (zoho)

58 / premiumize.me

\ (premiumizeme)

59 / seafile

\ (seafile)

Storage> s3

Option provider.

Choose your S3 provider.

Choose a number from below, or type in your own value.

Press Enter to leave empty.

1 / Amazon Web Services (AWS) S3

\ (AWS)

2 / Alibaba Cloud Object Storage System (OSS) formerly Aliyun

\ (Alibaba)

3 / Arvan Cloud Object Storage (AOS)

\ (ArvanCloud)

4 / Ceph Object Storage

\ (Ceph)

5 / China Mobile Ecloud Elastic Object Storage (EOS)

\ (ChinaMobile)

6 / Cloudflare R2 Storage

\ (Cloudflare)

7 / DigitalOcean Spaces

\ (DigitalOcean)

8 / Dreamhost DreamObjects

\ (Dreamhost)

9 / Google Cloud Storage

\ (GCS)

10 / Huawei Object Storage Service

\ (HuaweiOBS)

11 / IBM COS S3

\ (IBMCOS)

12 / IDrive e2

\ (IDrive)

13 / IONOS Cloud

\ (IONOS)

14 / Seagate Lyve Cloud

\ (LyveCloud)

15 / Leviia Object Storage

\ (Leviia)

16 / Liara Object Storage

\ (Liara)

17 / Linode Object Storage

\ (Linode)

18 / Magalu Object Storage

\ (Magalu)

19 / Minio Object Storage

\ (Minio)

20 / Netease Object Storage (NOS)

\ (Netease)

21 / Petabox Object Storage

\ (Petabox)

22 / RackCorp Object Storage

\ (RackCorp)

23 / Rclone S3 Server

\ (Rclone)

24 / Scaleway Object Storage

\ (Scaleway)

25 / SeaweedFS S3

\ (SeaweedFS)

26 / StackPath Object Storage

\ (StackPath)

27 / Storj (S3 Compatible Gateway)

\ (Storj)

28 / Synology C2 Object Storage

\ (Synology)

29 / Tencent Cloud Object Storage (COS)

\ (TencentCOS)

30 / Wasabi Object Storage

\ (Wasabi)

31 / Qiniu Object Storage (Kodo)

\ (Qiniu)

32 / Any other S3 compatible provider

\ (Other)

provider> Cloudflare

Option env_auth.

Get AWS credentials from runtime (environment variables or EC2/ECS meta data if no env vars).

Only applies if access_key_id and secret_access_key is blank.

Choose a number from below, or type in your own boolean value (true or false).

Press Enter for the default (false).

1 / Enter AWS credentials in the next step.

\ (false)

2 / Get AWS credentials from the environment (env vars or IAM).

\ (true)

env_auth> 1

Option access_key_id.

AWS Access Key ID.

Leave blank for anonymous access or runtime credentials.

Enter a value. Press Enter to leave empty.

access_key_id> [YOUR_ACCESS_KEY_ID]

Option secret_access_key.

AWS Secret Access Key (password).

Leave blank for anonymous access or runtime credentials.

Enter a value. Press Enter to leave empty.

secret_access_key> [YOUR_SECRET_ACCESS_KEY]

Option region.

Region to connect to.

Choose a number from below, or type in your own value.

Press Enter to leave empty.

1 / R2 buckets are automatically distributed across Cloudflare's data centers for low latency.

\ (auto)

region> 1

Option endpoint.

Endpoint for S3 API.

Required when using an S3 clone.

Enter a value. Press Enter to leave empty.

endpoint> [YOUR_ENDPOINT_URL]

Edit advanced config?

y) Yes

n) No (default)

y/n> n

Configuration complete.

Options:

- type: s3

- provider: Cloudflare

- access_key_id: [YOUR_ACCESS_KEY_ID]

- secret_access_key: [YOUR_SECRET_ACCESS_KEY]

- region: auto

- endpoint: [YOUR_ENDPOINT_URL]

Keep this "r2" remote?

y) Yes this is OK (default)

e) Edit this remote

d) Delete this remote

y/e/d> y

Current remotes:

Name Type

==== ====

r2 s3

wasabi s3

e) Edit existing remote

n) New remote

d) Delete remote

r) Rename remote

c) Copy remote

s) Set configuration password

q) Quit config

e/n/d/r/c/s/q> q

Checking the configuration

less ~/.config/rclone/rclone.conf

[wasabi]

type = s3

provider = Wasabi

access_key_id = ************************************

secret_access_key = ************************************

region = ap-northeast-1

endpoint = s3.ap-northeast-1.wasabisys.com

location_constraint = ap-northeast-1

acl = private

[r2]

type = s3

provider = Cloudflare

access_key_id = ************************************

secret_access_key = ************************************

region = auto

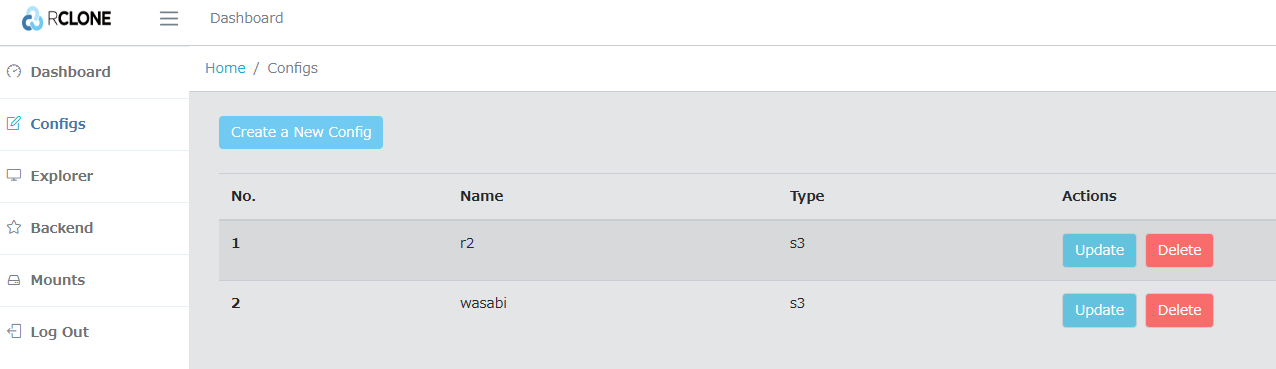

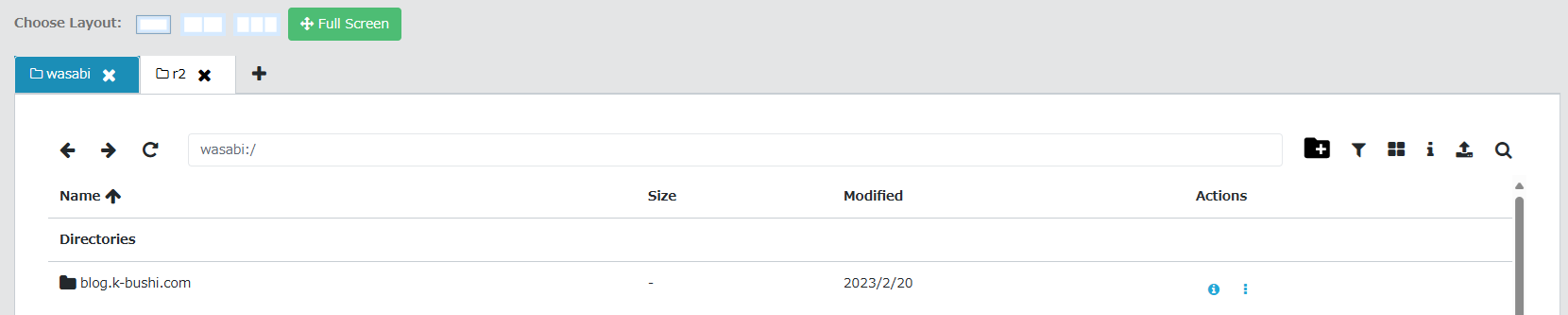

endpoint = https://************************************.r2.cloudflarestorage.comWebUIでの確認

You can also check with the GUI (experimental).

rclone rcd --rc-web-gui

Note: this appears to fail with an error.

2024/09/23 07:34:28 ERROR : Failed to open Web GUI in browser: exec: "xdg-open": executable file not found in $PATH. Manually access it at: [URL]

According to https://github.com/cli/cli/issues/826, setting export BROWSER=wslview beforehand should help.

→ This turned out to be unnecessary.

xdg-open is missing, so install xdg-utils.

Reference: https://zenn.dev/kakushina/articles/abaee803f77e1d

sudo apt-get install xdg-utils

After installing, run the following.

rclone rcd --rc-web-gui --rc-user user --rc-pass pass

2024/09/23 07:41:23 NOTICE: Web GUI exists. Update skipped.

2024/09/23 07:41:23 NOTICE: Serving Web GUI

2024/09/23 07:41:23 NOTICE: Serving remote control on http://127.0.0.1:5572/

Once this log appears, open http://127.0.0.1:5572/.

Log in with user / pass.

Each cloud storage is reachable from the Explorer view, so the setup looks good.

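Running the migration

Sync using the form rclone sync [source remote name]:[bucket name] [destination remote name]:[bucket name].

I have a bucket named minecraft-back, so I ran the following.

rclone sync wasabi:minecraft-back r2:minecraft-back

After a while the prompt returns, so check the result.

From here, migrating the remaining buckets the same way completes the job.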
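Since a bare sync only reports success by returning to the prompt, here is a minimal sketch of one way to make each bucket's run easier to verify. It assumes the remotes are named wasabi and r2 as configured earlier; the helper name `migrate_bucket` and the `RCLONE` variable are illustrative and not part of rclone. It previews the transfer with --dry-run, performs the sync, then compares both sides with rclone check.

```shell
#!/bin/sh
# RCLONE can be overridden (e.g. RCLONE=echo) to print the commands
# instead of running them; by default the real rclone binary is used.
RCLONE="${RCLONE:-rclone}"

# migrate_bucket SRC_BUCKET [DST_BUCKET]
# Hypothetical helper: previews, syncs, then verifies one bucket.
migrate_bucket() {
  src="wasabi:$1"
  dst="r2:${2:-$1}"   # destination defaults to the same bucket name

  "$RCLONE" sync "$src" "$dst" --dry-run || return 1   # preview only, no changes
  "$RCLONE" sync "$src" "$dst" --progress || return 1  # actual transfer
  "$RCLONE" check "$src" "$dst"                        # compare sizes and, where available, hashes
}
```

Usage would be `migrate_bucket minecraft-back`, repeated for each remaining bucket. Keep in mind that sync makes the destination match the source, so files that exist only on the destination are deleted.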

Note: the following error occurred during migration.

S3 bucket invest.small-talk: error reading destination root directory: operation error S3: ListObjectsV2, https response error StatusCode: 400, RequestID: , HostID: , api error InvalidBucketName: The specified bucket name is not valid.

The bucket name invest.small-talk appears to be the problem, apparently because of the dot. Changing the destination bucket name from

rclone sync wasabi:invest.small-talk r2:invest.small-talk

to

rclone sync wasabi:invest.small-talk r2:invest-small-talk

made the sync succeed.
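If several buckets have dots in their names, the renaming can be scripted. The following is a small sketch that assumes the dot is the only offending character, as it was in my case; `r2_bucket_name` is a hypothetical helper name, and no other naming rules are checked.

```shell
#!/bin/sh
# r2_bucket_name NAME
# Hypothetical helper: maps a Wasabi bucket name to an R2-friendly one by
# replacing every "." with "-". Other naming rules are not checked here.
r2_bucket_name() {
  printf '%s\n' "$1" | tr '.' '-'
}

r2_bucket_name invest.small-talk   # prints: invest-small-talk
```

The result can then be used as the destination, for example `rclone sync "wasabi:$b" "r2:$(r2_bucket_name "$b")"` where `$b` holds the source bucket name.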

References

rclone

https://rclone.org/

How to set a Basic-auth ID/password in rclone's GUI mode

https://qiita.com/yugo-yamamoto/items/7fc83ad9991c4e1740b8

Cloudflare R2

https://www.cloudflare.com/ja-jp/developer-platform/r2/

Migrating data to Cloudflare R2

https://developers.cloudflare.com/r2/data-migration/

A small stumbling block when using npm docs

https://zenn.dev/kakushina/articles/abaee803f77e1d

InvalidBucketName | AWS SimSpace Weaver

https://docs.aws.amazon.com/ja_jp/simspaceweaver/latest/userguide/troubleshooting_bucket-name-too-long.html

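Conclusion

I migrated from Wasabi to Cloudflare R2.

I plan to use it for about a year and see how it goes.

If a one-time-purchase cloud storage service such as pCloud turns out to fit my requirements better, that would be fine too, so I would like to look into it at some point.

Addendum

Once the migration is complete, delete your Wasabi account.

I kept being charged $7 for two months without noticing...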